Harmonic Co-clustering Heatmap

(→Hierarchical Partition Tree) |

(→Hierarchical Partition Tree) |

||

| Line 5: | Line 5: | ||

The geometrical structure of a high dimensional data cloud is assumed to be captured by a finer and finer hierarchical partition tree in different levels. Different levels represent different resolutions of the dataset. The bottom level of the tree is comprised with N (number of points) folders each consists a single member from N elements. The top level of the hierarchical tree is comprised with one folder which is the combination of all elements. In the middle levels, folders are combinations of elements from lower levels. All those folders are obtained by applying a simple heuristic k-means clustering on lower level data points. Each folder is denoted as a new data point by a linear combination of the all elements that make up the folder. | The geometrical structure of a high dimensional data cloud is assumed to be captured by a finer and finer hierarchical partition tree in different levels. Different levels represent different resolutions of the dataset. The bottom level of the tree is comprised with N (number of points) folders each consists a single member from N elements. The top level of the hierarchical tree is comprised with one folder which is the combination of all elements. In the middle levels, folders are combinations of elements from lower levels. All those folders are obtained by applying a simple heuristic k-means clustering on lower level data points. Each folder is denoted as a new data point by a linear combination of the all elements that make up the folder. | ||

| − | [[Image: | + | [[Image:Hierarchical_Partition_Tree.png]] |

== K-means Clustering == | == K-means Clustering == | ||

Revision as of 21:48, 15 November 2012

Contents |

Introduction

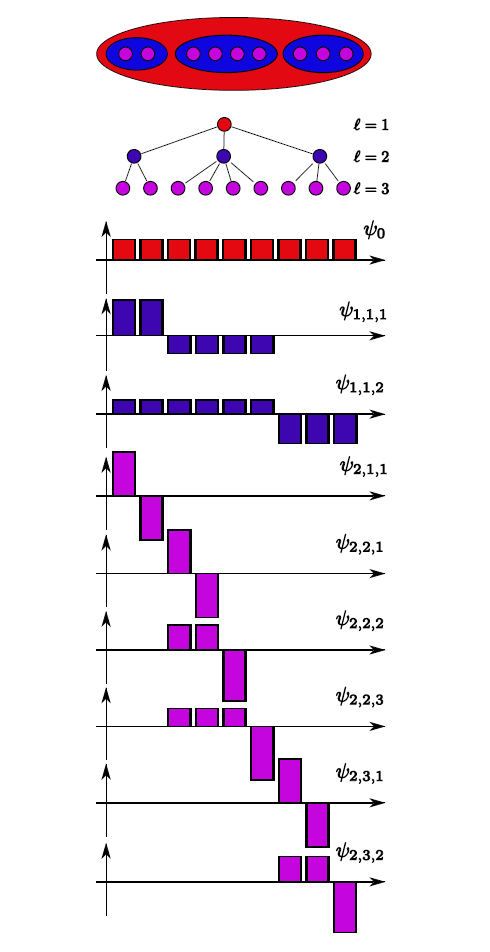

Co-clustering is a data mining technique that clusters both rows and columns of a data matrix simultaneously. It looks for clusters of similar objects and correlated features that distinguish groups of objects. Harmonic co-clustering is a co-clustering method that achieves clusters in both row space and column space, block structures of a data matrix based on discrete harmonic analysis. Harmonic basis are induced from a constructed coupled geometry of the two spaces. We obtain the coupled geometry by taking the tensor product of local geometry which is captured by a hierarchical partition tree in both row space and column space. Data set is smooth and block structure is obtained with respect to low entropy of expansion coefficients on data matrix. The algorithm proceeds in an iterative way that each local geometry is constructed on the update version of geometry of the other space. By iteratively doing this, the data set become more and more compact and smooth each time. The entropy of expansion coefficients will converge to a low value as thresholdded.

Hierarchical Partition Tree

The geometrical structure of a high dimensional data cloud is assumed to be captured by a finer and finer hierarchical partition tree in different levels. Different levels represent different resolutions of the dataset. The bottom level of the tree is comprised with N (number of points) folders each consists a single member from N elements. The top level of the hierarchical tree is comprised with one folder which is the combination of all elements. In the middle levels, folders are combinations of elements from lower levels. All those folders are obtained by applying a simple heuristic k-means clustering on lower level data points. Each folder is denoted as a new data point by a linear combination of the all elements that make up the folder.

K-means Clustering

K-means is one of the simplest unsupervised learning algorithms that solve the well known clustering problem. The procedure follows a simple and easy way to classify a given data set through a certain number of clusters (assume k clusters) fixed a priori. The main idea is to define k centroids, one for each cluster. These centroids shoud be placed in a cunning way because of different location causes different result. So, the better choice is to place them as much as possible far away from each other. The next step is to take each point belonging to a given data set and associate it to the nearest centroid. When no point is pending, the first step is completed and an early groupage is done. At this point we need to re-calculate k new centroids as barycenters of the clusters resulting from the previous step. After we have these k new centroids, a new binding has to be done between the same data set points and the nearest new centroid. A loop has been generated. As a result of this loop we may notice that the k centroids change their location step by step until no more changes are done. In other words centroids do not move any more. Finally, this algorithm aims at minimizing an objective function, in this case a squared error function.

Coupled Geometry

Based on the MSTs, Earth Mover's Distance(EMD) method is used for MSTs and modules to see how each MST fits each module. If the value is large, it means the MST can well present the module progression to some extent. For this step, just click on EMD button when available, it will match all MSTs and modules. The running state is updated in command window.

Heatmap

Once the matching among MSTs and modules has finished, a similarity matrix for modules is built. PSM threshold, progression similarity matrix threhold, is set to determine whether two modules are similar. The similarity is one if the module is matching with its own MST. It approaches zero if the MST doesn't fit the module at all. "Selecting percentage" is the percentage of the values higher than the threshold in the similarity matrix. This param depends on the way you trust the similarity judgement. If you trust the similarity value more, just focus on the threhold; If you trust the percentage more, set the threhold so as to let the percentage fall in the range you would like it to. Click Show PSM, a heatmap would pop out for you to select the similar modules. The heatmap is colored from red to blue. Dark red represents high values and dark blue low values. It's symetric so it's recommended to select along the diagonal by left button down clicking on the left-up corner starting block and left button up clicking on the right-down corner ending block. All the blocks in this symetric square will be chosen and their corresponding module IDs will be filled in the "Input hand-picked modules".

Recommended param setting for :

Set the threshold so that the percentage is 0.2 to 0.3.

Progression Tree

Beyond the auto-filling module IDs by right clicking on the heatmap, you can also edit them yourself. If you want to add IDs, make sure they are seperated by comma. After these are all set, click View Progression, the final progression tree is built. You can select the nodes and view the corresponding items in other views. If the samples have been clustered, a vertex represents a cluster with its size number shown near the vertex.

Progression Heatmap

Progression Heatmap is built from the progression tree. Its row order is the tree node order, and its column order is the feature cluster order, the corresponding hierachical clustering dendrogram is shown above the heatmap. The heatmap is colored based on the normalized feature values. When you click vertex in the progression tree, the corresponding rows and selected feature columns will be selected.