Validation Methods

Validation, Performance Assessment, & Quality Control

Validation: This is the process of giving the user sufficient confidence that the results of automated segmentation/registration are valid for a specific intended use. Visual inspection that confirms correctness and the absence of significant flaws is a form of qualitative validation. Qualitative validation can be thought of as a user certifying that the results of automated analysis are good enough for a certain purpose. It relies on human judgement. In contrast, quantitative validation is based on some form of performance assessment and on setting a threshold on one or more performance criteria. It means that the results meet those criteria, such as sufficiently low bias and variance (more below).

The nature of the intended use (application context) has a strong influence on validation. For example, an algorithm can produce highly accurate results over 99% of the image region, but fare badly on the remaining 1%. If this small region happens to be unimportant biologically/medically, then it is fair to consider the results valid. However, if this is the most important region, then the opposite conclusion holds. For example, in retinal image analysis, segmentation/registration errors in certain regions of an image can have a disproportionate impact in some applications (e.g., the macular region of a retinal fundus image is far more critical than the retinal periphery), implying that simplistic performance criteria computed over the whole image can be naive.
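The point about region importance can be illustrated with a small sketch. The function name `weighted_accuracy`, the 100-pixel image, and the weight of 50 for the critical pixel are all illustrative assumptions, not part of FARSIGHT.

```python
def weighted_accuracy(correct, weights=None):
    """Fraction of correctly labeled pixels, optionally weighted by importance."""
    if weights is None:
        weights = [1.0] * len(correct)
    return sum(w for ok, w in zip(correct, weights) if ok) / sum(weights)

# Hypothetical 100-pixel image: only the last pixel is wrong, but it lies
# in the critical region (the weight of 50 is an arbitrary illustrative choice).
correct = [True] * 99 + [False]
weights = [1.0] * 99 + [50.0]

plain = weighted_accuracy(correct)
weighted = weighted_accuracy(correct, weights)
print(f"unweighted accuracy: {plain:.3f}")
print(f"weighted accuracy:   {weighted:.3f}")
```

With these assumed weights the whole-image score is 0.99, while the importance-weighted score falls to about 0.66, even though the segmentation itself is unchanged.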

Performance Assessment: This is the process of quantifying the performance of a segmentation/registration algorithm using one or more performance measures. A variety of performance measures are in use (e.g., bias and variance), but there is no universally accepted best measure. A collection of performance measures constitutes a multivariate performance profile. We are interested in assessing individual algorithms, as well as in comparative assessment of results from multiple alternative algorithms or from the same algorithm under alternative parameter settings. We are interested in validation and performance assessment methods for detection, segmentation, classification, and change analysis algorithms. We are also interested in methods that are practical to use, scalable, and require minimal manpower compared to traditional methods.

Classical Validation Methods

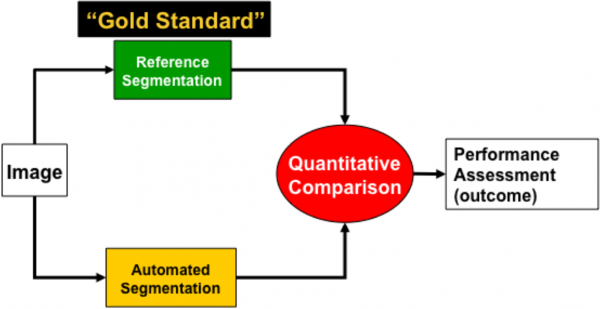

The classical “bedrock strategy” for validating automated image analysis algorithms is to compare their results to pre-stored ground truth data that is known a priori to be accurate and valid. In other words, the ground truth data serves as the gold standard for evaluating the automated results. The first step to comparing automated results with ground truth is to examine them visually in a manner that preserves their relative context. To meet this universal need, the FARSIGHT system includes tools for overlaying segmentation results over the raw image data.

Two such cases are listed below:

Synthetic Phantom Data

One widely-used approach to ground truth generation is to create a phantom image (or image sequence) of a man-made calibrated object with accurately known parameters and perturbations. This type of "benchtop testing" is helpful for controlled evaluation of some aspects of image analysis algorithms, e.g., performance as a function of signal-to-noise ratios, choice of settings, and morphological characteristics of objects in a tightly controlled manner. For example, one can make sure that the algorithm produces the correct result when zero noise or other perturbations are applied. Next, one can study performance degradation (increase in discrepancies compared to the phantom data) as increasing levels of noise and perturbations are added. This can be quantified by computing a measure of discrepancy (e.g., bias and variance) between the correct answer and the answer produced by the automated algorithm. This type of study can be readily extended to the task of comparing two or more algorithms under identical conditions. Synthetic data based validation is most commonly used as new algorithms are being developed, or refined. Unfortunately, however, phantom-based validation has innate limitations because man-made objects rarely reflect the full biological complexity of real tissue.
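A minimal sketch of such a benchtop study follows. It assumes a one-dimensional phantom (a bright bar of known width) and a toy threshold-based "segmentation"; the function names, the threshold of 0.5, and the noise levels are illustrative choices, not FARSIGHT code.

```python
import random
import statistics

def make_phantom(length=200, start=80, width=40):
    """1-D synthetic phantom: a bright bar of known width on a dark background."""
    return [1.0 if start <= i < start + width else 0.0 for i in range(length)]

def estimate_width(signal, threshold=0.5):
    """Toy 'segmentation': count the samples brighter than the threshold."""
    return sum(1 for v in signal if v > threshold)

def bias_and_variance(true_width, noise_sigma, trials=200, seed=0):
    """Bias and variance of the width estimate under additive Gaussian noise."""
    rng = random.Random(seed)
    phantom = make_phantom(width=true_width)
    estimates = []
    for _ in range(trials):
        noisy = [v + rng.gauss(0.0, noise_sigma) for v in phantom]
        estimates.append(estimate_width(noisy))
    bias = statistics.fmean(estimates) - true_width
    variance = statistics.pvariance(estimates)
    return bias, variance

# Sweep the noise level and watch the discrepancy grow.
for sigma in (0.0, 0.1, 0.2, 0.4):
    b, v = bias_and_variance(40, sigma)
    print(f"sigma={sigma:.1f}  bias={b:+.2f}  variance={v:.2f}")
```

At zero noise the estimate matches the phantom exactly, so both bias and variance are zero. Running the same sweep with a second estimator under the same seed gives a like-for-like comparison of two algorithms.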

Data from Physical Phantoms

At the next level of sophistication, one can place precisely fabricated (calibrated) man-made objects under the microscope (e.g., fluorescent microbeads, dye-filled micro-pipettes, etc.) and record images of them. The resulting images are a little more realistic and less tightly controlled than in the synthetic data case, and are often helpful in developing a better understanding of algorithm strengths and weaknesses.

Real Biological Data

A practical strategy for validating automated image analysis results for real data is to use the currently best-available “gold standard”. In this regard, the human observer continues to be the most widely accepted and versatile gold standard, since the human visual system remains very hard to beat on general visual tasks. In other words, image analysis systems are attempting to automate tasks that have traditionally been carried out by humans. By some estimates, the human visual system takes up roughly 2/3 of our brain's computing capacity, and it is a formidable competitor. This is not to imply that the human observer is perfect; this is far from true. The human observer has significant weaknesses as well. The human-generated gold standard is subject to errors from at least two sources: inter-subject variations and intra-subject variations. The former are understandable: individuals vary greatly in their manual image analysis abilities. The latter are surprising to many: the same individual may produce different results on two different days for the same image. Many human factors, including the level of attentiveness, fatigue, habituation, and focus, cause variations in results.

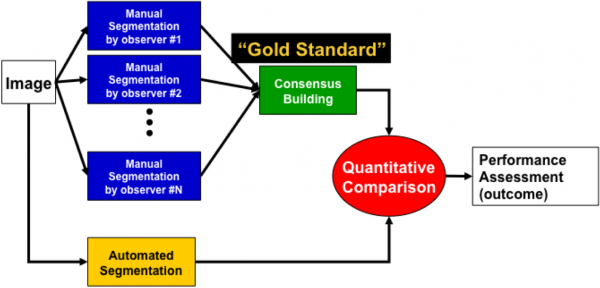

One approach to overcoming inter-subject errors is to hand the image to multiple independent observers, say N of them, who analyze the image without consulting each other and produce a set of results, which we denote H1, H2, H3, ..., HN. Any protocol that uses multiple observers is termed “multi-observer validation”, and is de rigueur for good quality work. One can imagine many ways to compare the automatically generated results against the set of multiple observer results, and several approaches have been described in the literature. The main difficulty is the handling of conflicting assessments. For example, one observer may count a cell whereas another may not, and each may have a well-reasoned justification for doing so.

One practical protocol for multi-observer validation that we have used with great success is to convene a meeting of the observers and request that they discuss their differing assessments and arrive at a multi-observer consensus, denoted H*, that is compared against the automated result. Keep in mind that human consensus building is a very non-linear process that is difficult to model mathematically. However, our experience (aided by common sense) indicates that the multi-observer consensus represents one of the best-available “gold standards” today. We find this to be superior to comparing the automated algorithm to the individual human-generated results H1, H2, H3, ..., and computing aggregate performance metrics.
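Once a consensus H* exists, comparing it against the automated result can be as simple as the sketch below. It assumes that detected objects have already been matched to shared integer ids, which is a non-trivial step in practice. The function name and the example ids are hypothetical, and precision and recall are just one possible choice of performance measures.

```python
def detection_scores(automated, consensus):
    """Precision and recall of automated detections against the consensus H*."""
    automated, consensus = set(automated), set(consensus)
    true_positives = len(automated & consensus)
    precision = true_positives / len(automated) if automated else 0.0
    recall = true_positives / len(consensus) if consensus else 0.0
    return precision, recall

# Hypothetical example: the observers agreed on ten cells (ids 1..10);
# the algorithm found nine of them plus one spurious object (id 42).
h_star = set(range(1, 11))
automated = set(range(1, 10)) | {42}

precision, recall = detection_scores(automated, h_star)
print(f"precision={precision:.2f}  recall={recall:.2f}")
```

Here nine of the ten automated detections are in H*, and nine of the ten consensus cells were found, so both scores are 0.9.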

Limitations of the Multi-observer Gold Standard

The above methodology is widely used and accepted, and represents a practical way to address issues such as subjectivity and inter-subject variations. However, it has several practical limitations that are worth considering. The first major limitation is that it is costly (since manpower is generally expensive) and slow. It cannot be scaled directly to large-scale and/or high-throughput studies. In other words, it is impractical to generate a sufficiently rich and diverse set of gold standard results. A practical impact of this limitation is that algorithm developers can easily fall into the trap of optimizing their algorithms to the few gold standards that are available. Such algorithms can fail miserably when applied to new datasets although their performance on the gold standards may be attractive. Biological images have amazing diversity and variability, so this type of situation is indeed common.

A second major limitation of multi-observer validation as outlined above is that it does not help us perform quality control over actual real-world datasets that are processed by the automated algorithm. Arguably, this is the most germane and important issue in a real-world application, since it determines whether or not the biological investigator chooses to use the automated analysis results for his/her biological investigations. Practically speaking, the professional goal of image analysts is to develop algorithms and software that are actually adopted by biological researchers in their scientific investigations. This has remained a challenge even after many years of progress in the field of automated algorithms. It is fair to state that overcoming the “adoption barrier” remains one of the greatest challenges for computational image analysts. This is an issue with surprising complexity that includes not only objective factors such as measurable performance of algorithms, but also subjective and cultural factors that are difficult to document precisely. These experiences have motivated us to develop the set of validation protocols described next.

Edit Based Validation

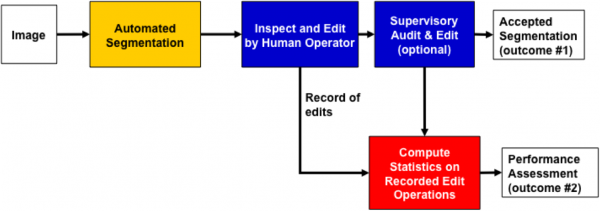

The “edit based validation” protocol is a streamlined method designed for practical operational use. It evaluates the quality of an automated image analysis result for an image on which the automated algorithm has not been run before. This methodology is appropriate for mature algorithms that are expected to produce results with a high accuracy (typically in the 90 to 100% range). This expectation can either be set by a previous multi-observer validation, or by a quick visual survey of the results. In this case, it makes sense to edit the automated results to correct the few errors that remain. The end result is a set of results that are acceptable to the biological investigator. This is not to imply that the results accepted by the user are altogether error free. However, they are usable for biological investigations of interest. It is simple to protect against subjectivity on the part of the user by allowing editing by multiple observers (in sequence or as a consensus group). While many such protocols are possible in theory, we have found the following protocol to be most useful. A supervisor can oversee/audit the edits made by a junior member of the laboratory, and make additional edits as needed (or undo the junior member's edits). The end result can be thought of as an operational "gold standard" for algorithm evaluation.

A reasonable expectation in this regard is that the effort required to edit will scale with the error rate of the automated algorithm, rather than the size/complexity of the image. For instance, if the algorithm’s accuracy improves from 90% to 95%, the error rate drops from 10% to 5% and the user’s effort is halved. This implies that edit-based validation can be an operational validation tool that is quite scalable in principle. We are finding this method to be effective in practice as well.
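The scaling argument can be written out as a short calculation. The sketch assumes one edit per erroneous object, which is a simplification, and the function name and object count are illustrative.

```python
def expected_edits(num_objects, accuracy):
    """Expected number of corrections, assuming one edit per erroneous object."""
    return num_objects * (1.0 - accuracy)

# Editing effort tracks the error rate (1 - accuracy), not the image size alone.
for accuracy in (0.90, 0.95, 0.99):
    edits = expected_edits(1000, accuracy)
    print(f"accuracy={accuracy:.2f}  expected edits per 1000 objects: {edits:.0f}")
```

Under this assumption, going from 90% to 95% accuracy cuts the expected number of edits per 1000 objects from about 100 to about 50.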

Interestingly, the edit based protocol yields two outcomes, not one. In addition to the corrected results that are directly usable by the user, we obtain a second outcome that is valuable to algorithm developers. Specifically, we record the trail of user edit operations. This trail (edit trace) reveals the deficiencies in the automated algorithm in great detail. The number of edits is a direct measure of algorithm accuracy. The nature of errors is reflected in the distribution of error types. In fact, recording of edits implements some aspects of Good Automated Laboratory Practices.
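One way to picture the edit trace is as a list of records that can be summarized after the editing session. The record layout, the operation names, and the accuracy estimate below are assumptions made for illustration; they do not describe the format that FARSIGHT actually stores.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Edit:
    op: str            # hypothetical operation names: "delete", "merge", "split"
    object_ids: tuple  # ids of the segmented objects touched by this edit

def summarize(trace, num_objects):
    """Return the distribution of edit types and a crude accuracy estimate."""
    by_type = Counter(edit.op for edit in trace)
    touched = {oid for edit in trace for oid in edit.object_ids}
    # Crude estimate: every object touched by an edit counts as one error.
    accuracy = 1.0 - len(touched) / num_objects
    return by_type, accuracy

trace = [
    Edit("delete", (17,)),
    Edit("merge", (4, 5)),
    Edit("split", (22,)),
    Edit("delete", (31,)),
]
by_type, accuracy = summarize(trace, num_objects=100)
print(dict(by_type))
print(f"estimated accuracy: {accuracy:.2f}")
```

In this example five of 100 objects were touched, giving an estimated accuracy of 0.95, and the counts per operation show which kind of error dominates.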

Making it Even Faster: Cluster Editing

The edit based validation protocol is much faster than the classical multi-observer method, but is nevertheless amenable to substantial improvement. One idea being pursued in the FARSIGHT project is Cluster Editing. Basically, if we can identify a group of similar objects that require the same editing operation (e.g., delete), then the operation can be implemented en masse over the entire group. In theory, if there are N possible types of segmentation errors, then it could take as few as N cluster edit operations. This number can be dramatically smaller than the number of objects that need editing.
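A cluster edit can be sketched as a single operation applied to every member of a selected group. The object representation (dictionaries with `id` and `area`), the function name, and the area cutoff used to select the group are hypothetical.

```python
def apply_cluster_edit(objects, ids, operation):
    """Apply one operation to every object whose id is in ids.

    The operation returns the edited object, or None to delete it.
    """
    ids = set(ids)
    result = []
    for obj in objects:
        if obj["id"] in ids:
            obj = operation(obj)
            if obj is None:
                continue  # the object was deleted by the edit
        result.append(obj)
    return result

# Hypothetical segmentation output: three tiny objects are debris.
areas = [120, 3, 115, 2, 130, 4, 118, 125]
objects = [{"id": i, "area": a} for i, a in enumerate(areas)]

# The user selects the group once and deletes it in one operation.
debris = [obj["id"] for obj in objects if obj["area"] < 10]
cleaned = apply_cluster_edit(objects, debris, lambda obj: None)
print(f"{len(debris)} objects removed by 1 cluster edit, {len(cleaned)} remain")
```

The three debris objects are removed by one user action instead of three, which is where the saving over object-by-object editing comes from.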

Making it Smarter: Pattern Analysis

The idea of cluster editing is elegant in principle, but requires a method to identify groups of similar objects. Cluster analysis is a well-developed subject in pattern analysis theory. Whenever objects are segmented, it is possible to compute a set of features for each object. These features are the 'grist' for pattern analysis algorithms. Even the simplest forms of pattern analysis have proven to be invaluable. For example, outlier detection algorithms highlight all objects that have unusual features compared to the overall population. Grouping algorithms can identify subsets of objects with similar features. Learning algorithms can learn from previous editing operations and generate editing suggestions for the user. The possibilities are endless.
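As an example of the simplest kind of pattern analysis, the sketch below flags outliers in a single feature using a robust z-score built from the median and the median absolute deviation (MAD). This particular detector, the cutoff of 3.5, and the area values are our illustrative choices; the text does not specify which outlier detection method is used.

```python
import statistics

def robust_outliers(values, cutoff=3.5):
    """Indices of values whose robust z-score exceeds the cutoff."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread in the data, so nothing can be flagged
    scale = 1.4826 * mad  # makes the MAD comparable to a standard deviation
    return [i for i, v in enumerate(values) if abs(v - med) / scale > cutoff]

# Hypothetical per-object areas: one merged blob (310) and one fragment (4).
areas = [102, 98, 105, 99, 101, 97, 100, 310, 103, 4]
flagged = robust_outliers(areas)
print("objects flagged for inspection:", flagged)
```

The two flagged objects (indices 7 and 9) are the ones a user would inspect first. Whether they are segmentation errors or simply unusual objects is still a human judgement.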

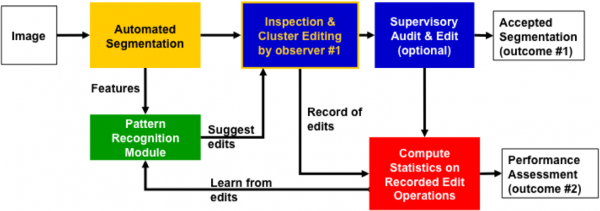

Putting these Ideas Together: PACE

PACE stands for Pattern Analysis Aided Cluster Editing. It brings together the ideas described above, and forms the basis for validation in FARSIGHT. The accompanying diagram illustrates PACE graphically.

The idea here is to exploit the power of features and pattern analysis algorithms. Even the simplest forms of pattern analysis have proven effective. For example, outlier analysis reveals objects that are either unusual or incorrectly segmented. The record of edits is a valuable input to learning algorithms. Edits are applied to groups of objects at a time; we call this cluster editing. This leads to a dramatic reduction in the effort needed to inspect and validate segmentation results.

Workflows for Validation, Performance Assessment, & Quality Control

Workflows are standardized and efficiently designed procedures for laboratory staff to follow. The ideal workflow that we strive for is one in which there are exactly N operations for a segmentation in which N types of errors are possible. In practice, more operations than that are required, so the question is just how closely we can approach the ideal workflow. This is the subject of ongoing research.