Validation Methods

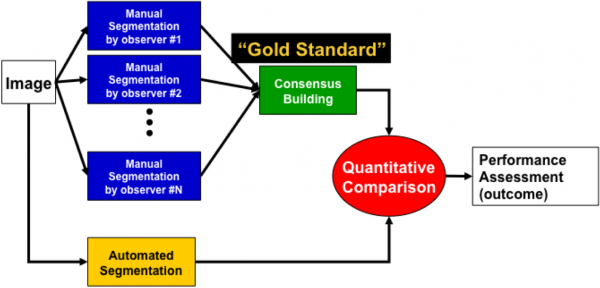

[[Image:MultiObserver.png|600px|thumb|center|Validation and performance assessment by comparing automatically generated results to a multiple-observer consensus-based "gold standard"]]

Revision as of 16:05, 14 April 2009

Validation is the process of establishing the validity of an automated algorithm. Performance assessment and quality control are closely related topics, and all three must be considered together. Validation and performance assessment are of utmost importance: a carefully validated algorithm with known (and acceptable) performance can be deployed with confidence in biological studies, whereas an unvalidated one cannot. In the FARSIGHT project, we are interested in validation and performance assessment methods for detection, segmentation, classification, and change analysis algorithms. We are also interested in methods that are practical to use, scalable, and require minimal manpower compared to traditional methods.

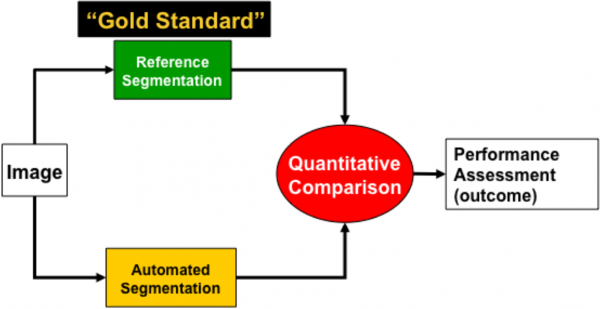

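Classical Validation Methods: The classical approach to validation is to compare automated results to “ground truth data” that is known in advance to be an accurate result. Two such cases (a and b) are listed below, followed by the case of real biological data (c), where a “gold standard” takes the place of ground truth: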

a. Synthetic Data: One approach to ground truth generation is to create a man-made phantom image (or image sequence) with known parameters and perturbations. This is helpful for evaluating several aspects of image analysis algorithms in a tightly controlled manner. For example, one can make sure that the algorithm produces the correct result when zero noise or other perturbations are applied. Next, one can study performance degradation (increase in discrepancies compared to the phantom data) as increasing levels of noise and perturbations are added. This can be quantified by computing a measure of discrepancy (e.g., bias and variance) between the correct answer and the answer produced by the automated algorithm. This type of study can be readily extended to the task of comparing two or more algorithms under identical conditions. Synthetic data based validation is most commonly used as new algorithms are being developed, or refined.
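The synthetic-data procedure described above can be sketched as follows. This is only a minimal illustration under stated assumptions: the 1-D Gaussian phantom, the centroid estimator standing in for "the automated algorithm", and all function names and parameter values are hypothetical and are not taken from FARSIGHT.

```python
import math
import random
import statistics


def make_phantom(length=64, center=20.0, width=3.0):
    """Noise-free 1-D phantom: one Gaussian blob at a known position (assumed shape)."""
    return [math.exp(-0.5 * ((i - center) / width) ** 2) for i in range(length)]


def add_noise(signal, sigma, rng):
    """Perturb the phantom with additive Gaussian noise of standard deviation sigma."""
    return [v + rng.gauss(0.0, sigma) for v in signal]


def estimate_center(signal):
    """Stand-in for the automated algorithm: centroid of samples above half the maximum."""
    threshold = 0.5 * max(signal)
    pairs = [(i, v) for i, v in enumerate(signal) if v >= threshold]
    total = sum(v for _, v in pairs)
    return sum(i * v for i, v in pairs) / total


def bias_and_variance(true_center, sigma, trials=200, seed=0):
    """Discrepancy between the known answer and the estimates over repeated noisy trials."""
    rng = random.Random(seed)
    phantom = make_phantom(center=true_center)
    estimates = [estimate_center(add_noise(phantom, sigma, rng)) for _ in range(trials)]
    bias = statistics.fmean(estimates) - true_center
    variance = statistics.pvariance(estimates)
    return bias, variance


if __name__ == "__main__":
    # Start at zero noise, then study degradation as the noise level increases.
    for sigma in (0.0, 0.05, 0.1, 0.2):
        bias, variance = bias_and_variance(true_center=20.0, sigma=sigma)
        print(f"sigma={sigma:.2f}  bias={bias:+.4f}  variance={variance:.4f}")
```

Running the same loop with a second estimator in place of `estimate_center` gives a comparison of two algorithms under identical conditions.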

b. Data from Physical Phantoms: At the next level of sophistication, one can place precisely fabricated man-made objects under the microscope (e.g., fluorescent microbeads, dye-filled micro-pipettes, etc.) and record images of them. The resulting images are a little more realistic and less tightly controlled than in the synthetic data case, and are often helpful in developing a better understanding of algorithm strengths and weaknesses.

c. Real Biological Data: A practical strategy for validating automated image analysis results for real data is to use the currently best-available “gold standard”. In this regard, the human observer continues to be the most widely accepted and versatile gold standard since the human visual system remains unbeatable for visual tasks. In other words, image analysis systems are attempting to automate tasks that have traditionally been carried out by humans. The human visual system takes up roughly 2/3 of our brain's computing capacity, and is a formidable competitor. This is not to imply that the human observer is perfect – this is far from true. Human observers have significant weaknesses as well. The human-generated gold standard is subject to errors from at least two sources – inter-subject variations and intra-subject variations. The former are understandable – individuals vary greatly in their manual image analysis abilities. The latter are surprising to many – the same individual may produce different results on two different days for the same image. Many human factors, including the level of attentiveness, fatigue, habituation, and focus, cause variations in results.

One approach to overcome inter-subject errors is to hand the image to multiple independent observers, say <math>N</math> of them, who analyze the image without consulting each other, and produce a set of results – we denote them <math>\{H_1, H_2, \ldots, H_N\}</math>. Any protocol that uses multiple observers is termed “multi-observer validation”, and is de rigueur for good quality work. One can imagine many ways to compare the automatically generated results against the set of multiple observer results, and several approaches have been described in the literature. The main difficulty is the handling of conflicting assessments. For example, one observer may count a cell whereas another may not, and each may have a well-reasoned justification for doing so.
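To make the notion of conflicting assessments concrete, the sketch below tallies how many observers marked each candidate cell and separates unanimous items from disputed ones. It assumes, purely for illustration, that every observer's result is a set of shared cell identifiers; in practice the observers' marks would first have to be matched to one another. The function names and identifiers are hypothetical.

```python
from collections import Counter


def tally_votes(observer_results):
    """Count, for each candidate cell, how many observers marked it."""
    votes = Counter()
    for marked in observer_results:
        votes.update(set(marked))
    return votes


def split_by_agreement(observer_results):
    """Return (cells marked by every observer, {disputed cell: number of votes})."""
    n = len(observer_results)
    votes = tally_votes(observer_results)
    unanimous = {cell for cell, count in votes.items() if count == n}
    disputed = {cell: count for cell, count in votes.items() if count < n}
    return unanimous, disputed


if __name__ == "__main__":
    # Three hypothetical observers analyzing the same image independently.
    h1 = {"c1", "c2", "c3"}
    h2 = {"c1", "c3", "c4"}
    h3 = {"c1", "c2", "c3"}
    unanimous, disputed = split_by_agreement([h1, h2, h3])
    print("unanimous:", sorted(unanimous))
    print("disputed:", dict(sorted(disputed.items())))
```

The disputed items are exactly the ones on which the observers' assessments conflict.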

One practical protocol for multi-observer validation that we have used with great success is to convene a meeting of the observers and request that they discuss their differing assessments and arrive at a multi-observer consensus, denoted <math>H^*</math>, that is compared against the automated result. Keep in mind that human consensus building is a highly non-linear process that is difficult to model mathematically. However, our experience (aided by common sense) indicates that the multi-observer consensus represents one of the best-available “gold standards” today. We find this to be superior to comparing the automated algorithm to the individual human-generated results <math>\{H_1, H_2, \ldots, H_N\}</math> and computing aggregate performance metrics.
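Once a consensus has been agreed, comparing it against the automated result is straightforward. The sketch below is one possible way to do this, not the FARSIGHT implementation: it assumes both results are sets of shared cell identifiers, and the choice of precision, recall, and F1 as discrepancy measures, along with all names, is an assumption made for illustration. The consensus itself comes from the observers' discussion and is simply an input here.

```python
def score_against_reference(automated, reference):
    """Compare automated detections with a reference set such as the consensus H*."""
    automated, reference = set(automated), set(reference)
    true_pos = len(automated & reference)   # found by both
    false_pos = len(automated - reference)  # automated only
    false_neg = len(reference - automated)  # missed by the automated result
    precision = true_pos / len(automated) if automated else 0.0
    recall = true_pos / len(reference) if reference else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "true_pos": true_pos,
        "false_pos": false_pos,
        "false_neg": false_neg,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


if __name__ == "__main__":
    # Hypothetical consensus reached by the observers, and a hypothetical automated result.
    consensus = {"c1", "c2", "c3", "c4"}
    automated = {"c1", "c2", "c3", "c5"}
    print(score_against_reference(automated, consensus))
```

The same function can be called once per observer to obtain the per-observer comparison that the text contrasts with the consensus-based one.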